Chapter 8 Data Collection

Before any statistical analysis and vizualizations, robust data need to be collected. This important step often requires a proper experimental design, i.e. an experimental design that would assure relevant and meaningful data are obtained with maximum efficiency to answer our research questions. This chapter approaches all the required steps to reach such goal, from setting up the test (e.g. estimation of the number of panelists, design of sensory evaluation sessions and design of experiments), to the collection of data (through valuable execution tips) and its importation in a statistical software (R, here).

8.1 Designs of sensory (DoE) experiments

Like with any other chapter, let’s start by loading the {tidyverse}.

library(tidyverse)8.1.1 General approach

Sensory and consumer science relies on experiments during which subjects usually evaluate several samples one after the other. This type of procedure is called ‘monadic sequential’ and is common practice for all three main categories of tests (difference testing, descriptive analysis, hedonic testing). The main advantage of proceeding this way is that responses are within-subjects (data can be analyzed at the individual level) so that analysis and interpretation can account for inter-individual differences, which is a constant feature of sensory data.

However, this type of approach also comes with drawbacks37 as it may imply order effects and carry-over effects (Macfie et al. 1989). Fortunately, most of these effects can be controlled with a proper design of experiment (DoE). A good design ensures that order and carry-over effects are not confounded with what you are actually interested to measure (most frequently, the differences between products) by balancing these effects across the panel. However, it is important to note that the design does not eliminate these effects and that each subject in your panel may still experience an order and a carry-over effect, as well as boredom, sensory fatigue, etc.

Before going any further into the design of sensory evaluation sessions, it is important to first estimate the number of panelists needed for your study. For that, you may rely on common practices. For instance, most descriptive analysis studies with trained panelists are typically conducted with 10-20 judges, whereas 100 participants is usually considered as a minimum for hedonic tests. Of course, these are only ballpark numbers and they must be adjusted to the characteristics of the population you are interested in and to the specifics of your study objectives. In all cases, a power analysis would be wise to make sure that you have a good rationale for your proposed sample size, especially for studies involving consumers. The {pwr} package provides a very easy way to do that, as shown in the example code below for a comparison between two products on a paired basis (such as in monadic sequential design). Note that you need to provide an effect size (expressed here by Cohen’s d, which is the difference you aim to detect divided by the estimated standard deviation of your population).

library(pwr)## Warning: package 'pwr' was built under R version 4.1.3pwr.t.test(n=NULL, sig.level=0.05, type="paired", alternative="two.sided", power=0.8, d=0.3)##

## Paired t test power calculation

##

## n = 89.14938

## d = 0.3

## sig.level = 0.05

## power = 0.8

## alternative = two.sided

##

## NOTE: n is number of *pairs*For discrimination tests (e.g. tetrad, 2-AFC, etc.), the reader may also refer to the {sensR} package and its discrimSS() function for the sample size calculation in both difference or similarity testing context.

8.1.2 Crossover designs

For any sensory experiment that implies the evaluation of more than one sample, first-order and/or carry-over effects should be expected. That is to say, the evaluation of a sample may affect the evaluation of the next sample even though sensory scientists try to lower such effects by asking panelists to pause between samples and use of appropriate mouth-cleansing techniques (drinking water, eating unsalted crackers, or a piece of apple, etc.). The use of crossover designs is thus highly recommended (Macfie et al. 1989).

Williams’s Latin-Square designs offer a perfect solution to balance carry-over effects. They are very simple to create using the williams() function from the {crossdes} package. For instance, if you have five samples to test, williams(5) would create a 10x5 matrix containing the position at which each of three samples should be evaluated by 10 judges (the required number of judges per design block).

Alternately, the WilliamsDesign() function in {SensoMineR} allows you to create a matrix of samples (as numbers) with numbered Judges as row names and numbered Ranks as column names. You only have to specify the number of samples to be evaluated, as in the example below for 5 samples.

library(SensoMineR)

wdes_5P10J <- WilliamsDesign(5)## Rank 1 Rank 2 Rank 3 Rank 4 Rank 5

## Judge 1 1 5 4 2 3

## Judge 2 5 2 1 3 4

## Judge 3 2 3 5 4 1

## Judge 4 3 4 2 1 5

## Judge 5 4 1 3 5 2

## Judge 6 3 2 4 5 1

## Judge 7 4 3 1 2 5

## Judge 8 1 4 5 3 2

## Judge 9 5 1 2 4 3

## Judge 10 2 5 3 1 4Suppose you want to include 20 judges in the experiment, you would then need to duplicate the initial design.

wdes_5P20J <- do.call(rbind, replicate(2, wdes_5P10J, simplify=FALSE))

rownames(wdes_5P20J) <- paste("judge", 1:20, sep="")## Rank 1 Rank 2 Rank 3 Rank 4 Rank 5

## judge1 1 5 4 2 3

## judge2 5 2 1 3 4

## judge3 2 3 5 4 1

## judge4 3 4 2 1 5

## judge5 4 1 3 5 2

## judge6 3 2 4 5 1

## judge7 4 3 1 2 5

## judge8 1 4 5 3 2

## judge9 5 1 2 4 3

## judge10 2 5 3 1 4

## judge11 1 5 4 2 3

## judge12 5 2 1 3 4

## judge13 2 3 5 4 1

## judge14 3 4 2 1 5

## judge15 4 1 3 5 2

## judge16 3 2 4 5 1

## judge17 4 3 1 2 5

## judge18 1 4 5 3 2

## judge19 5 1 2 4 3

## judge20 2 5 3 1 4The downside of Williams’s Latin square designs is that the number of samples (k) to be evaluated dictates the number of judges. For an even number of samples you must have a multiple of k judges, and a multiple of 2k judges for an odd number of samples.

As the total number of judges in your study may not always be exactly known in advance (e.g. participants not showing up to your test, extra participants recruited at the last minute), it can be useful to add some flexibility to the design. Of course, additional rows would depart from the perfectly balanced design, but it is possible to optimize them using Federov’s algorithm thanks to the optFederov() function of the {AlgDesign} package, by specifying augment = TRUE. For example we can add three more judges to the Williams Latin square design that we just built for nbP=5 products and 10 judges, hence leading to a total number of nbP=13 judges. Note that this experiment is designed so that each judge will evaluate all the products, therefore the number of samples per judge (nbR) equals the number of products (nbP).

library(AlgDesign)

nbJ=13

nbP=5

nbR=nbP

wdes_5P10J <- WilliamsDesign(nbP)

tab <- cbind(prod=as.vector(t(wdes_5P10J)), judge=rep(1:nbJ,each=nbR), rank=rep(1:nbR,nbJ))

optdes_5P13J <- optFederov(~prod+judge+rank, data=tab, augment=TRUE, nTrials=nbJ*nbP, rows=1:(nbJ*nbP), nRepeats = 100)

xtabs(optdes_5P13J$design)## rank

## judge 1 2 3 4 5

## 1 4 2 5 3 1

## 2 2 3 4 1 5

## 3 3 1 2 5 4

## 4 1 5 3 4 2

## 5 5 4 1 2 3

## 6 1 3 5 2 4

## 7 5 1 4 3 2

## 8 4 5 2 1 3

## 9 2 4 3 5 1

## 10 3 2 1 4 5

## 11 4 2 5 3 1

## 12 2 3 4 1 5

## 13 3 1 2 5 4In the code above, xtabs() is used to arrange the design in a table format that is convenient for the experimenter.

Note that it would also be possible to start from an optimal design and expand it to add one judge at a time. The code below first builds a design for 5 products and 13 judges and then adds one judge to make the design optimal for 5 products and 14 judges.

nbJ=13

nbP=5

nbR=nbP

optdes_5P13J <- optimaldesign(nbP, nbP, nbR)$design

tab <- cbind(prod=as.vector(t(optdes_5P13J)),judge=rep(1:nbJ,each=nbR),rank=rep(1:nbR,nbJ))## Warning in cbind(prod = as.vector(t(optdes_5P13J)), judge = rep(1:nbJ, each =

## nbR), : number of rows of result is not a multiple of vector length (arg 1)add <- cbind(prod=rep(1:nbP,nbR),judge=rep(nbJ+1,nbP*nbR),rank=rep(1:nbR,each=nbP))

optdes_5P14J <- optFederov(~prod+judge+rank,data=rbind(tab,add), augment=TRUE, nTrials=(nbJ+1)*nbP,

rows=1:(nbJ*nbP), nRepeats = 100)8.1.3 Balanced incomplete block designs (BIBD)

Sensory and consumer scientists may sometimes consider using incomplete designs, i.e. experiments in which each judge evaluates only a subset of the complete product set (Wakeling and MacFie 1995). In this case, the number of samples evaluated by each judge remains constant but is lower than the total number of products included in the study.

You might want to choose this approach for example if you want to reduce the workload for each panelist and limit sensory fatigue, boredom and inattention. It might also be useful when you cannot “afford” a complete design because of sample-related constraints (limited production capacity, very expensive samples, etc.). The challenge then, is to balance sample evaluation across the panel as well as the context (i.e. other samples) in which each sample is being evaluated. For such a design you thus want each pair of products to be evaluated together the same number of times.

The optimaldesign() function of {SensoMineR} can be used to search for a Balanced Incomplete Block Design (BIBD). For instance, let’s imagine that 10 panelists are evalauting 3 out of 5 possible samples. The design can be defined as following:

incompDesign1 <- optimaldesign(nbPanelist=10, nbProd=5, nbProdByPanelist=3)

incompDesign1$design## Rank 1 Rank 2 Rank 3

## Panelist 1 1 4 3

## Panelist 2 4 3 2

## Panelist 3 1 5 4

## Panelist 4 4 3 5

## Panelist 5 3 5 1

## Panelist 6 2 4 5

## Panelist 7 2 1 4

## Panelist 8 5 2 3

## Panelist 9 5 1 2

## Panelist 10 3 2 1BIBD are only possible for certain combinations of numbers of treatment (products), numbers of blocks (judges), and block size (number of samples per judge). Note that optimaldesign() will yield a design even if it is not balanced but it will also generate contingency tables allowing you to evaluate the design’s orthogonality, and how well balanced are order and carry-over effects.

You can also use the {crossdes} package to generate a BIBD with this simple syntax: find.BIB(trt, b, k, iter), with trt the number of products (here 5), b the number of judges (here 10), k the number of samples per judge (here 3), and iter the number of iteration (30 by default). Furthermore, the isGYD() functions evaluates whether the incomplete design generated is balanced or not. If the design is a BIBD, you may then use williams.BIB() to combine it with a Williams design to balance carry-over effects.

library(crossdes)

incompDesign2 <- find.BIB(trt=5, b=10, k=3)

isGYD(incompDesign2)

williams.BIB(incompDesign2)Incomplete balanced designs also have drawbacks. First, from a purely statistical perspective, they are conducive to fewer observations and thus to a lower statistical power. Product and Judge effects are also partially confounded even though the confusion is usually considered as acceptable.

8.1.4 Incomplete designs for hedonic tests: Sensory informed designs

One may also be tempted to use incomplete balanced block designs for hedonic tests. However, proceeding this way is likely to induce framing bias. Indeed, each participant will only see part of the product set which would affect their frame of reference if the subset of product they evaluate only covers a limited area of the sensory space.

Suppose you are designing a consumer test of chocolate chip cookies in which a majority of cookies are made with milk chocolate while a few cookies are made with dark chocolate chips. If a participant only evaluates samples that have milk chocolate chips, this participant will not know about the existence of dark chocolate and will potentially give very different scores compared to what they would have if they had a full view of the product category.

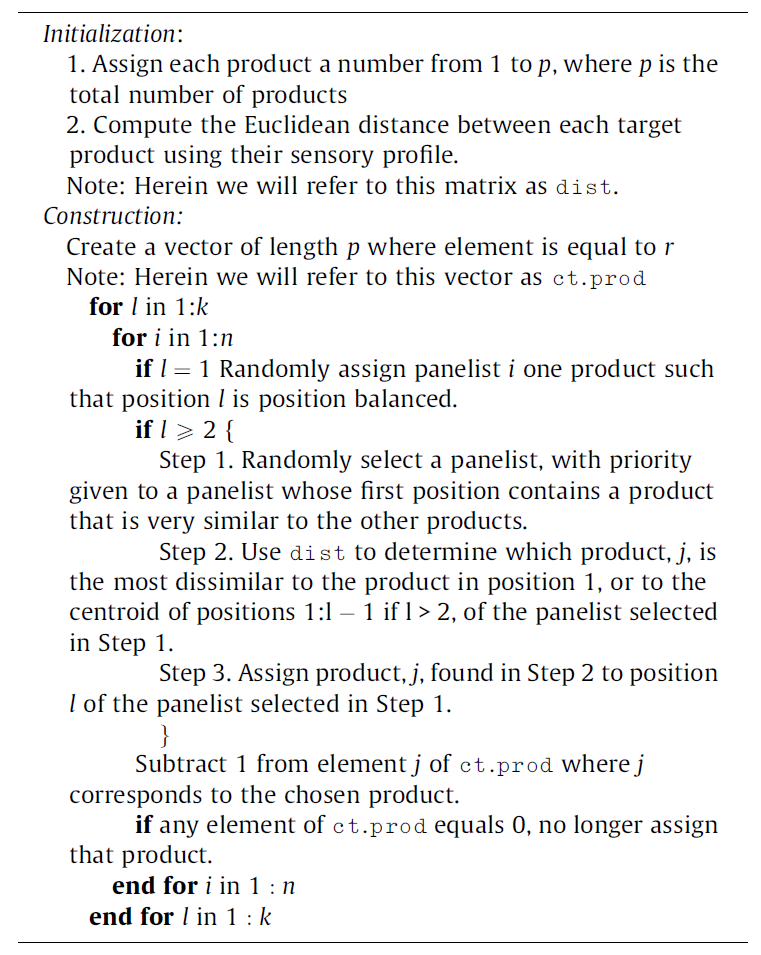

To reduce the risks incurred by the use of BIBD, an alternative strategy is to use a sensory informed design. Its principle is to allocate each panelist a subset of products that best cover the sensory diversity of the initial product set. Pragmatically, this amounts to maximizing the sensory distance between drawn products (Franczak et al. 2015). Of course, this supposes that one has sensory data to rely on in the first place.

8.3 Execute

Sir Ronald Fisher famously said in his presidential address to the first Indian statistical congress (1938): “To consult the statistician after an experiment is finished is often merely to ask him to conduct a post-mortem examination. He can perhaps say what the experiment died of.”

Hopefully, the sections above would have helped the sensory and consumer scientist designing their experiment in a way that would warrant them relevant and meaningful data that are obtained with maximum efficiency.

Fisher continues: “To utilise this kind of experience the statistician must be induced to use his imagination, and to foresee in advance the difficulties and uncertainties with which, if they are not foreseen, his investigations will be beset.” Fortunately, we can spare the reader some of these imagination efforts and reiterate the fundamental principles of sensory evaluation that should help avoiding major pitfalls^[For a more detailed description of these principles, we refer the reader to comprehensive sensory evaluation textbooks. See for instance (Lawless and Heymann 2010, (Civille and Carr, 2016) or (Stone, Bleibaum and Thomas, 2022)).

Individual evaluation

Probably the most important requirement for the validity of sensory measurements is to perform individual evaluation. Sensory responses are very easily biased when judges can communicate. When this happens, observations cannot be considered independent which would rule out most statistical tests. Although this principle is generally accepted and correctly applied, some situations may be more challenging in this regard (such as project team meetings, b2b sample demonstration, tasting events, etc.). Individual evaluation is usually ensured by the use of partitioned sensory booths, but it can also be achieved by other means (table-top partitions, curtains, separate tables, separate rooms). There are some cases, in consumer research, where interactions between subjects are allowed or even encouraged because they correspond to real-life situations. But these are exceptions to the rule, and in such cases, observations are to be considered at the group level.Balanced order effects and treatments

We already discussed the importance of balancing the evaluation order for first-order and carry-over effects (section 8.1.2). We cannot overstate how necessary this precaution is to get valid data. On top of having to deal with such effects, sensory scientists sometimes want to test how products are perceived (or liked) under different conditions (e.g. blind vs. branded, with/without nutritional information, in the lab vs. at home, etc.). Choice must then be made between a within-group design (in which participants evaluate the products under the different conditions) and a between-group design (in which participants evaluate the product under one condition only). As often in consumer science, there is no perfect experiment and these two options have pros and cons. For instance, the within-group design would be more powerful and would allow data analysis at the individual level, but it would be more likely to induce response biases. Note that in both cases, participants must be randomly assigned to one group (corresponding either to a given condition, or to the order in which each condition is being experienced if the study follows a within-group design).Blind evaluation and controlled evaluation conditions

The primary goal of most sensory tests is to measure panelists’ responses based on sensory properties only, without the interference of other variables that are seen as sources of potential biases. For this reason, tests are most frequently conducted on a blind-labeled basis without any information regarding the samples being tested (product identity, brand, price, nutritional facts, claims, etc.). Samples are thus usually blind labeled with random three-digit codes. This way, focus is placed on sensory perception and not on memory or expectations. Even information about the presence of duplicates or about the total number of products included in the design could induce biases. However it is not always possible to hide all information (for example when the brand is printed directly on the product). It should also be noted that information is sometimes included as part of the study design to precisely evaluate the effect of that information. Besides, when sensory evaluation is used for market research goals, evaluation of the full mix can be preferred.

Along the same lines and always in the view of collecting accurate and repeatable data, sensory scientists strive to control evaluation conditions. Sensory booths serve this purpose as they allow individual evaluation under controlled and standardized conditions. Nevertheless, for consumer tests (especially for hedonic tests), researchers may value the role of context in judgement construction and decision making, and thus seek to contextualize their experimental setup for gains in ecological validity (REF REF REF Galinanes Plaza).38Separate affective from analytical tasks

For sensory evaluation, a clear distinction is usually made between analytical measurements (whereby emphasis is placed on description of sample characteristics or on differences and similarities between samples) and affective measurements (whereby focus is placed on liking, preferences, and emotions that may derive from the consumption of a product). Because the tasks involved in these two types of measurements are very different, the general recommendation is to conduct them separately (and most often, with different people). Proceeding otherwise would risk inducing cognitive biases and collecting skewed - or even meaningless - data. For example, if the goal of a study is to measure how much consumers like a given set of food products, it wouldn’t make sense to ask trained panelists to rate their liking for the products they have been trained to describe. They can certainly do it, but their judgement of the products is likely to be changed by that training and by their extensive exposure to the product. Therefore, they can no longer be considered normal consumers. This is relatively commonsense. However, the risk of biases can sometimes be more subtle. Indeed, it might be tempting to ask consumers to give their liking for samples and, within the same session, to describe the same samples for a number of attributes. By doing so, you risk changing participants’ mindset (e.g. by over-focusing on specific attributes) and thus altering liking scores (REF REF). There is much debate though about which type of descriptive tasks would actually lead to biased responses (REF REF). With this in mind, experimenters might still consider conducting combined measurements for product optimization, especially to get rough estimates of product specifications to target in the first stage of product development. In this objective, Just-about-right (JAR) scales or the Ideal Profile Method (IPM) are very popular tasks. They provide a very direct way to optimize products’ sensory characteristics (REF REF). Alternately, one might expect that ‘untrained’ consumers cannot be used for descriptive analysis. However in the past few decades, the development of descriptive methods that do not require training and that can be achieved in a single session has made consumer-based descriptive analysis possible, reliable, and accepted (REF REF).Sample availability

An obvious, but essential, condition for conducting sensory evaluation, is to have samples available for testing. It is surprising to see how many sensory studies fail simply because the experimenters have not anticipated the production of experimental samples in sufficient quantities or procurement of commercial products. Especially, remember that for many sensory tests, samples are needed for training in addition to the evaluation itself. No data analysis can make up for a lack of samples, no matter how sophisticated it may be. We therefore strongly advise experimenters to review their need for samples when they design a study and, if they do not make the samples themselves, to discuss with their clients or project teams (R&D, pilot plant, suppliers, etc.) to ensure that samples will be available over the course of the study.

Regulations for studies with human subjects Running a sensory or a consumer study implies working with human subjects at some point (online surveys and simple passive observation count!). Therefore, experimenters must ensure that their protocol complies with local and international rules. Most often, research projects should be approved by an Institutional Review Board (IRB) or an appropriate ethical committee. As far as data are concerned, it is also important to ensure that data collection, use, and storage comply with applicable regulations such as EU’s General Data Protection Regulation (GDPR), or the California Consumer Privacy Act (CCPA)).

Quantification

Finally, it is critical that sensory and consumer scientists anticipate what type of analysis they will conduct in accordance with the exact information they are looking for and thus define what data type and scaling method they will adopt[SEE BOOK O’MAHONY // BOOK LAWLESS HEYMANN]. The means of quantification (counts, sorting, ranking, scaling, mapping, reaction time, etc.) has usually been set long before execution, when the study was designed. When time comes to run the tests, the experimenter will have to rely on a proper and reliable way to collect data. Nowadays, commercial sensory software solutions allow to collect any type of data, including temporal information. However, in some cases, the experimenter may choose to ask panelists to use paper and pencil, or just to give a verbal answer, or to arrange the samples physically on a bench. Care must then be taken to ensure proper coding scheme and data entry. At this stage, it is important to keep as much information as possible on the experimental details, such as who evaluated which sample, in which order, at what time, etc. It is usually advised to try to enter data in a single spreadsheet with one column per variable and one line per observation, but in some rare cases, it might be more convenient to enter each panelist’s data in separate tabs. This could be the case, for example for methods like free sorting or napping. Note that entering data is prone to mistakes and typos, especially when entered manually into a spreadsheet. In the next sections we will see how to import data from that spreadsheet into R (Section 8.4) and how to check for outliers and missing values (Section 9).

8.4 Import

It is a truism, but to analyze data we first need data. If this data is already available in R, then the analysis can be performed directly. However, in most cases, the data is stored outside the R environment, and needs to be imported.

In practice, the data might be stored in as many format as one can imagine, whether it ends up being a fairly common solution (.txt file, .csv file, or .xls(x) file), or software specific (e.g. Stata, SPSS, etc.).

Since it is very common to store the data in Excel spreadsheets (.xls(x)) due to its simplicity, the emphasis is on this solution. Fortunately, most generalities presented for Excel files also apply to other formats through base::read.table() for .txt files, base::read.csv() and base::read.csv2() for .csv files, or through the {read} package (which is part of the {tidyverse}).

For other (less common) formats, you may find alternative packages that would allow importing your files in R. Particular interest can be given to the package {rio} (rio stands for R Input and Output) which provides an easy solution that:

- Handles a large variety of files,

- Guess the type of file it is,

- Provides tools to import, export, and convert almost any type of data format, including .csv, .xls(x), or data from other statistical software such as SAS (.sas7bdat and .xpt), SPSS (.sav and .por), or Stata (.dta).

Similarly, the package {foreign} provides functions that allow importing data stored from other statistical software (incl. Minitab, S, SAS, Stata, SPSS, etc.).

Although Excel is most likely one of the most popular way of storing data, there are no {base} functions that allow importing such files directly. Fortunately, many packages have been developed for that purpose, including {XLConnect}, {xlsx}, {gdata}, and {readxl}. Due to its convenience and speed of execution, we will focus on {readxl}.

8.4.1 Importing Structured Excel File

First, let’s import the biscuits_sensory_profile.xlsx workbook using readxl::read_xlsx() by informing as parameter the location of the file and the sheet where it is stored. For convenience, we are using the {here}39 package to retrieve the path of the file (stored in file_path).

This file is called structured as all the relevant information is already stored in the same sheet in a structured way. In other words, no decoding is required here, and there are no ‘unexpected’ rows or columns (e.g. empty lines, or lines with additional information regarding the data that is not data):

- The first row within the Data sheet of biscuits_sensory_profile.xlsx contains the headers;

- From the second row onward, only data is being stored.

Since this data will be used for some analyses, it is assigned data to an R object called sensory.

library(here)

file_path <- here("data","biscuits_sensory_profile.xlsx")

library(readxl)

sensory <- readxl::read_xlsx(file_path, sheet="Data")To ensure that the importation went well, it is advised to print sensory after importation. Since {readxl} has been developed by Hadley Wickham and colleagues, its functions follow the {tidyverse} principles and the data thus imported is stored in a tibble. Let’s take advantage of the printing properties of a tibble to evaluate sensory:

sensory## # A tibble: 99 x 34

## Judge Product Shiny `External color inten~` `Color evenness` `Qty of inclus~`

## <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 J01 P01 52.8 30 22.8 9.6

## 2 J01 P02 48.6 30 13.2 10.8

## 3 J01 P03 48 45.6 17.4 7.8

## 4 J01 P04 46.2 45.6 37.8 0

## 5 J01 P05 0 23.4 49.2 0

## 6 J01 P06 0 50.4 24 27.6

## 7 J01 P07 5.4 6.6 17.4 0

## 8 J01 P08 0 51.6 48.6 23.4

## 9 J01 P09 0 42.6 18 21

## 10 J01 P10 53.4 36.6 11.4 18

## # ... with 89 more rows, and 28 more variables: `Surface defects` <dbl>,

## # `Print quality` <dbl>, Thickness <dbl>, `Color contrast` <dbl>,

## # `Overall odor intensity` <dbl>, `Fatty odor` <dbl>, `Roasted odor` <dbl>,

## # `Cereal flavor` <dbl>, `RawDough flavor` <dbl>, `Fatty flavor` <dbl>,

## # `Dairy flavor` <dbl>, `Roasted flavor` <dbl>,

## # `Overall flavor persistence` <dbl>, Salty <dbl>, Sweet <dbl>, Sour <dbl>,

## # Bitter <dbl>, Astringent <dbl>, Warming <dbl>, ...sensory is a tibble with 99 rows and 35 columns that includes the Judge information (first column, defined as character), the Product information (second column, defined as character), and the sensory attributes (third column onward, defined as numerical or dbl).

8.4.2 Importing Unstructured Excel File

In some cases, the data are not so well organized/structured, and may need to be decoded. This is the case for the workbook entitled biscuits_traits.xlsx.

In this file:

- The variables’ name have been coded and their corresponding names (together with some other valuable information we will be using in Section 10) are stored in a different sheet entitled Variables;

- The different levels of each variable (including their code and corresponding names) are stored in another sheet entitled Levels.

To import and decode this data set, multiple steps are required:

- Import the variables’ name only;

- Import the information regarding the levels;

- Import the data without the first line of header, but by providing the correct names (obtained in the step 1.);

- Decode each question (when needed) by replacing the numerical code by their corresponding labels.

Let’s start with importing the variables’ names from biscuits_traits.xlsx (sheet Variables)

file_path <- here("data","biscuits_traits.xlsx")

var_names <- readxl::read_xlsx(file_path, sheet="Variables")## # A tibble: 62 x 5

## Code Name Direction Value `Full Question`

## <chr> <chr> <chr> <dbl> <chr>

## 1 Q1 Living area <NA> NA <NA>

## 2 Q2 Housing <NA> NA <NA>

## 3 Q3 Judge <NA> NA <NA>

## 4 Q4 Height <NA> NA <NA>

## 5 Q5 Weight <NA> NA <NA>

## 6 Q6 BMI <NA> NA <NA>

## 7 Q7 Marital status <NA> NA <NA>

## 8 Q8 Household size <NA> NA <NA>

## 9 Q9 Income range <NA> NA <NA>

## 10 Q10 Occupation <NA> NA <NA>

## # ... with 52 more rowsIn a similar way, let’s import the information related to the levels of each variable, stored in the Levels sheet.

A deeper look at the Levels sheet shows that only the coded names of the variables are available. In order to include the final names, var_names is joined (using inner_join).

var_labels <- readxl::read_xlsx(file_path, sheet="Levels") %>%

inner_join(dplyr::select(var_names, Code, Name), by=c(Question="Code"))## # A tibble: 172 x 4

## Question Code Levels Name

## <chr> <dbl> <chr> <chr>

## 1 Q1 1 Urban Area Living area

## 2 Q1 2 Rurban Area Living area

## 3 Q1 3 Rural Area Living area

## 4 Q2 1 Apartment Housing

## 5 Q2 2 House Housing

## 6 Q7 1 Divorced Marital status

## 7 Q7 2 Married Marital status

## 8 Q7 3 Conjugal Marital status

## 9 Q7 4 Single Marital status

## 10 Q7 5 Civil Partnership Marital status

## # ... with 162 more rowsUltimately, the data (Data) is imported by substituting the coded names with their corresponding names. This process can be done by skipping reading the first row of the data that contains the coded header (skip=1), and by passing Var_names as header or column names (after ensuring that the names’ sequence perfectly match across the two tables!).

Alternatively, you can import the data by specifying the range in which the data is being stored (here `).

The data has now the right headers, however each variable is still coded numerically. This step to convert the numerical values with their corresponding labels is described in Section 9.

It can happen that the data include extra information regarding the levels of a factor as sub-header. In such case, a similar approach should be used: 1. Start with importing the first n rows of the data that contain this information using the parameter

n_maxfrom `. 2. From this subset, extract the column names. 3. For each variable (when information is available), store the additional information as a list of tables that contains the code and their corresponding label. 4. Re-import the data by skipping these n rows, and by manually informing the headers.

8.4.3 Importing Data Stored in Multiple Sheets

It can happen that the data that need to be analyzed is stored in different files, or in different sheets within the same file. Such situation could happen if the same test involving the same samples is repeated over time, or has been run simultaneously in different locations, or simply for convenience for the person who manually collected the data.

Since the goal here is to highlight the possibilities in R to handle such situations, we propose to use a small fake example where 12 panelists evaluated 2 samples on 3 attributes in 3 sessions, each session being stored in a different sheet in excel_scrap.xlsx.

A first approach to tackle this problem could be to import each file separately, and to combine them together using the bind_rows() function from the {dplyr} package. However, this solution is not optimal since it is very tedious when a larger number of sheets is involved, and it is not automated since the code will no longer run (or be incomplete) when the number of session changes.

Instead, we prefer to fully automate the importation. To do so, let’s first introduce excel_sheets() from {readxl}: this function provides the name of all the sheets that are available in the file of interest in a list. Then, through map() from the {purrr} package, we apply read_xlsx() to all the elements one by one of obtained with excel_sheets().

path <- file.path("data", "excel_scrap.xlsx")

files <- path %>%

excel_sheets() %>%

set_names(.) %>%

map(~readxl::read_xlsx(path, sheet = .))As can be seen, this procedure creates a list of tables, with as many elements are there are sheets in the excel file.

As an alternative, consider using

import_list()from{rio}as it imports automatically all the sheets from a spreadsheet with one single command.

To convert this list of data tables into one unique data frame, we first extend the previous code and enframe() it by informing that the separation was based on Session. Once done, the data (stored in data) is still nested in a list, and should be unfolded. Such operation is done with the unnest() function from {tidyr}:

files %>%

enframe(name = "Session", value = "data") %>%

unnest(cols = c(data))## # A tibble: 72 x 6

## Session Subject Sample Sweet Sour Bitter

## <chr> <chr> <chr> <dbl> <dbl> <dbl>

## 1 Session 1 J1 P1 46.6 82.6 25.5

## 2 Session 1 J2 P1 1.28 60.1 13.9

## 3 Session 1 J3 P1 29.1 48.5 62.8

## 4 Session 1 J4 P1 29.9 79.2 52.7

## 5 Session 1 J5 P1 56.6 71.4 17.5

## 6 Session 1 J6 P1 45.7 43.1 64.8

## 7 Session 1 J7 P1 93.7 1.16 62.9

## 8 Session 1 J8 P1 33.6 59.7 96.0

## 9 Session 1 J9 P1 29.6 45.5 58.2

## 10 Session 1 J10 P1 3.57 48.1 59.0

## # ... with 62 more rowsThis procedure finally returns a tibble with 72 rows and 6 columns, ready to be analyzed!

Few additional remarks regarding the last set of code: 1. Instead of

enframe(), we could have usedreduce()from{purrr}, ormap()combined withbind_rows(). However, both these solutions have the drawbacks that the information regarding theSessionwould be lost since it is not part of the data set itself. 2. The functionsenframe()andunnest()have their alter-ego indeframe()andnest()which aim in transforming a data frame into a list of tables, and in nesting data by creating a list-column of data frames. 3. In case the different sets of data are stored in different excel files (rather than different sheets within a file), we could apply a similar procedure by usinglist.files()(instead ofexcel_sheets()) from the{base}package, together withpattern = "xlsx"to limit the search to Excel files present in a pre-defined folder. Such solution becomes handy when many similarly structured files are stored in the same folder and need to be combined.

Bibliography

Market researchers would argue that evaluating several products in a row doesn’t usually happen in real life and that proceeding this way may induce response biases. They thus advocate the use of pure monadic designs in which participants are only given one sample to evaluate. This corresponds to a between-group design that is also frequently used in fields where only one treatment per subject is possible (drug testing, nutrition studies, etc.).↩︎

Sensory and consumer research facilities such as living labs or immersive spaces are used in efforts to better account for the role of context without compromising on control. For more information on this topic, see REF REF REF Meiselman’s book on context↩︎

The package

{here}is very handy as it provides an easy way to retrieve your file’s path (within your working directory) by simply giving the name of the file and folder in which they are stored in.↩︎